What are crawlers?

Crawlers, also known as spiders or bots, are automated programs that systematically browse the web and collect page content for indexing. Their primary function is to help search engines understand, categorize, and rank web pages by relevance and content. By continuously scanning pages, crawlers build the index that search engines such as Google use to return relevant results to users.

Web crawlers are essentially the eyes and ears of search engines: they let a search engine see what is on each web page, understand its content, and decide where it fits in the index. Working outward from pages they already know, crawlers gradually map the structure of the web, much like a librarian cataloging books.

How Do Crawlers Work?

Crawlers start with a seed list of URLs, which they fetch and inspect. As they parse each page, they identify links to other pages and add any they have not yet seen to a queue for later crawling. Following links from one page to another in this way lets them discover new content and map how pages connect. Each page’s content, including text, images, and meta tags, is analyzed and stored in a large index, which search engines use to retrieve relevant information in response to user queries.
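The seed-and-queue loop described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular search engine works: the `crawl` function, the `fetch` callback, and the in-memory `PAGES` dictionary are all hypothetical names introduced here so that the example runs without network access.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(seeds, fetch, max_pages=100):
    """Breadth-first crawl. `fetch(url)` returns HTML, or None on failure."""
    queue = deque(seeds)   # URLs waiting to be visited
    seen = set(seeds)      # URLs already queued, to avoid repeats
    visited = []
    while queue and len(visited) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue
        visited.append(url)
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            # Resolve relative links and drop #fragments before queueing.
            absolute, _fragment = urldefrag(urljoin(url, href))
            if absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return visited


# Hypothetical in-memory "web" standing in for real HTTP requests.
PAGES = {
    "https://example.com/": '<a href="/a">A</a> <a href="/b">B</a>',
    "https://example.com/a": '<a href="/b">B</a> <a href="/">Home</a>',
    "https://example.com/b": '<a href="/c#top">C</a>',
    "https://example.com/c": "No links here.",
}

order = crawl(["https://example.com/"], PAGES.get)
print(order)
```

A production crawler would add politeness delays, robots.txt checks, and a prioritized queue; the `seen` set is the piece that keeps the loop from revisiting pages that link to each other.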

Before fetching pages from a site, well-behaved crawlers consult the site’s robots.txt file, which lives at the root of the host rather than on each individual page. This file provides rules that indicate which paths may be crawled and which should be skipped. After checking these rules, crawlers fetch the allowed pages and follow hyperlinks according to predefined policies, such as the number of links pointing to a page or the page’s authority. These policies help prioritize which pages are crawled first, so that more important or relevant pages are indexed promptly.
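As a rough illustration of the robots.txt check, Python’s standard library includes `urllib.robotparser`. The rules, the `ExampleBot` user agent, and the `allowed` helper below are made up for this sketch; a real crawler would download the file from the site instead of parsing a hard-coded string.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for an example site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def allowed(url, user_agent="ExampleBot"):
    """Return True if the parsed rules permit this user agent to fetch the URL."""
    return parser.can_fetch(user_agent, url)


print(allowed("https://example.com/blog/post"))
print(allowed("https://example.com/cart/checkout"))
```

The first URL matches no `Disallow` rule, so it may be fetched; the second falls under `/cart/` and should be skipped.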

As they crawl, these bots store the content and metadata of each page. This information is crucial for search engines in determining the relevance of a page to a user’s search query. The collected data is then indexed, allowing the search engine to quickly retrieve and rank pages when a user performs a search.
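One common way to make stored content quickly retrievable is an inverted index, which maps each term to the pages that contain it. The sketch below assumes that technique for illustration only; it says nothing about how a specific search engine stores or ranks pages, and the page texts and function names are hypothetical.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase the text and split it into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages):
    """Map each term to the set of URLs whose text contains it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for term in tokenize(text):
            index[term].add(url)
    return index


def search(index, query):
    """Return the URLs that contain every term in the query."""
    terms = tokenize(query)
    if not terms:
        return set()
    results = set(index.get(terms[0], set()))
    for term in terms[1:]:
        results &= index.get(term, set())
    return results


# Hypothetical crawled pages: URL -> extracted text.
PAGES = {
    "/guides/crawlers": "Web crawlers follow links and index pages",
    "/guides/affiliate": "Affiliate links and tracking",
    "/guides/seo": "SEO helps crawlers index pages",
}

index = build_index(PAGES)
print(search(index, "crawlers index"))
print(search(index, "affiliate links"))
```

This only answers "which pages contain these terms"; ranking the matches by relevance is a separate step that the sketch leaves out.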

The Role of Crawlers in Search Engine Optimization (SEO)

For affiliate marketers, understanding the functionality of crawlers is essential for optimizing their websites and improving search engine rankings. Effective SEO involves structuring web content in a way that is easily accessible and understandable to these bots. Important SEO practices include:

- Keyword Optimization: Including relevant keywords in the page title, headers, and throughout the content helps crawlers identify the page’s subject matter, increasing its chances of being indexed for those terms. It is crucial for content to be keyword-rich but also natural and engaging to ensure optimal indexing and ranking.

- Site Structure and Navigation: A clear and logical site structure with interlinked pages ensures that crawlers can efficiently navigate and index content, improving search visibility. A well-structured site also enhances user experience, which can positively affect SEO.

- Content Freshness and Updates: Regularly updating content attracts crawlers, prompting more frequent visits and potentially enhancing search rankings. Fresh and relevant content signals to search engines that a website is active and its content is up-to-date.

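- Robots.txt and Directives: Using a robots.txt file allows webmasters to tell crawlers which paths to crawl or skip, which helps focus the crawl budget on essential content. Note that robots.txt controls crawling rather than indexing: a blocked URL can still end up in the index if other pages link to it. A page that must stay out of search results needs a noindex directive (a robots meta tag or an X-Robots-Tag response header) and has to remain crawlable so that the directive can be read.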

Crawlers and Affiliate Marketing

In the context of affiliate marketing, crawlers have a nuanced role. Here are some key considerations:

- Affiliate Links: Affiliate links are typically marked with a “nofollow” rel attribute (Google also recognizes “sponsored” for paid and affiliate links), which signals to crawlers not to pass SEO value through the link. This helps maintain the integrity of search results while still allowing affiliates to track conversions, and it prevents search rankings from being manipulated through artificially inflated link value.

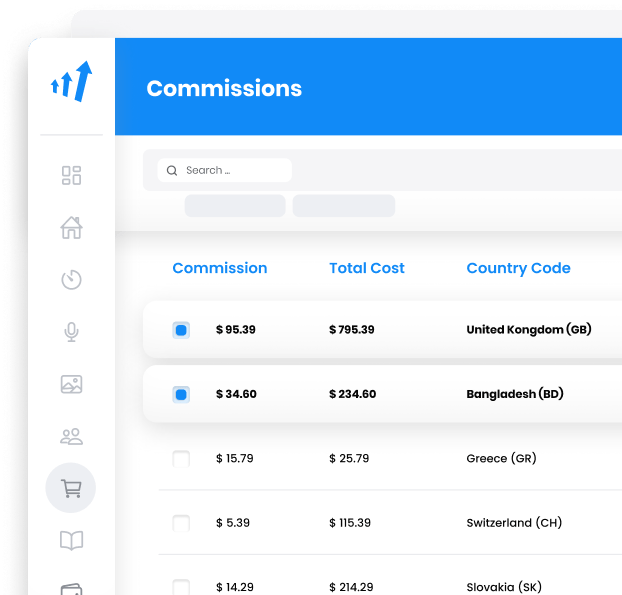

- Crawl Budget Optimization: Search engines allocate a specific crawl budget for each site. Affiliates should ensure that this budget is spent on indexing valuable and unique pages instead of redundant or low-value content. Efficient use of crawl budgets ensures that the most important pages are indexed and ranked.

- Mobile Optimization: With the shift to mobile-first indexing, it’s vital for affiliate sites to be mobile-friendly. Crawlers evaluate the mobile version of a site, affecting its search rankings. Ensuring a seamless mobile experience is crucial, as more users access the web via mobile devices.
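To check the affiliate-link point above on a real page, a small audit script can flag affiliate links that carry no qualifying `rel` value. The sketch below is one possible approach: the affiliate hostnames are placeholders, and treating `sponsored` as equivalent to `nofollow` is an assumption based on Google’s published guidance on qualifying outbound links.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse

# Hypothetical affiliate hosts; replace with the networks a site actually uses.
AFFILIATE_HOSTS = {"partner.example.net", "track.example.org"}
# rel values treated as correctly qualifying an affiliate link (assumption).
QUALIFYING_REL = {"nofollow", "sponsored"}


class AffiliateLinkAuditor(HTMLParser):
    """Records affiliate links that lack a qualifying rel value."""

    def __init__(self):
        super().__init__()
        self.unmarked = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href") or ""
        if urlparse(href).hostname not in AFFILIATE_HOSTS:
            return  # internal or non-affiliate link
        rel_tokens = set((attributes.get("rel") or "").lower().split())
        if not rel_tokens & QUALIFYING_REL:
            self.unmarked.append(href)


def find_unmarked_affiliate_links(html):
    auditor = AffiliateLinkAuditor()
    auditor.feed(html)
    return auditor.unmarked


SAMPLE_HTML = """
<a href="https://partner.example.net/p/1?ref=42" rel="nofollow sponsored">Marked</a>
<a href="https://track.example.org/offer">Missing rel</a>
<a href="/about">Internal</a>
"""

unmarked = find_unmarked_affiliate_links(SAMPLE_HTML)
print(unmarked)
```

Only the second link is reported, because it points at an affiliate host and has no `rel` attribute at all.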

Tools for Monitoring Crawl Activity

Affiliate marketers can utilize tools like Google Search Console to gain insights into how crawlers interact with their sites. These tools provide data on crawl errors, sitemap submissions, and other metrics, enabling marketers to enhance their site’s crawlability and indexing. Monitoring crawl activity helps identify issues that may hinder indexing, allowing for timely corrections.

The Importance of Indexing Content

Indexed content is essential for visibility in search engine results. A web page that has not been indexed won’t appear in search results, regardless of its relevance to a query. For affiliates, ensuring that their content is indexed is therefore crucial for driving organic traffic and conversions.

Web Crawlers and Technical SEO

Technical SEO involves optimizing the website’s infrastructure to facilitate efficient crawling and indexing. This includes:

- Structured Data: Implementing structured data helps crawlers understand the content’s context, improving the site’s chances of appearing in rich search results. Structured data provides additional, machine-readable information that can enhance search visibility.

- Site Speed and Performance: A server that responds quickly lets crawlers fetch more pages in the same amount of time, and fast-loading pages contribute to a positive user experience. Enhanced site speed can lead to better rankings and increased traffic.

- Error-Free Pages: Identifying and rectifying crawl errors ensures that all important pages are accessible and indexable. Regular audits help maintain site health and improve SEO performance.
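Structured data is often embedded as JSON-LD using the schema.org vocabulary. The sketch below generates such a snippet with Python’s `json` module; the `product_json_ld` helper and the product details are hypothetical, and a real page would likely include more properties than the three shown.

```python
import json


def product_json_ld(name, description, url):
    """Build a JSON-LD <script> element describing a product (illustrative)."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "description": description,
        "url": url,
    }
    # Escape "</" so a value can never close the surrounding <script> tag early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


snippet = product_json_ld(
    "Example Widget",
    "A placeholder product used for illustration.",
    "https://example.com/widget",
)
print(snippet)
```

The resulting element can be placed in the page’s HTML, where crawlers that support JSON-LD can read it without affecting what visitors see.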

Frequently Asked Questions

How can search engine crawlers be identified?

Search engine crawlers can be identified in a number of ways, including looking at the user-agent string of the crawler, examining the IP address of the crawler, and looking for patterns in the request headers.
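Because a user-agent string can be spoofed, the user-agent and IP checks are usually combined. The sketch below shows one way to do that for Googlebot, following the reverse-then-forward DNS approach Google describes for verifying its crawlers. The function names and the token list are illustrative assumptions, and `verify_googlebot_ip` needs network access and, as written, only handles IPv4.

```python
import socket

# User-agent tokens of a few well-known crawlers (illustrative, not exhaustive).
KNOWN_CRAWLER_TOKENS = ("googlebot", "bingbot", "duckduckbot")

# Hostname suffixes expected for Googlebot reverse DNS results (assumption
# based on Google's published verification guidance).
GOOGLEBOT_HOST_SUFFIXES = (".googlebot.com", ".google.com")


def claims_to_be_crawler(user_agent):
    """Cheap first check: does the user-agent string name a known crawler?"""
    ua = user_agent.lower()
    return any(token in ua for token in KNOWN_CRAWLER_TOKENS)


def hostname_matches(hostname, suffixes=GOOGLEBOT_HOST_SUFFIXES):
    """True if the hostname ends with one of the expected domain suffixes."""
    return hostname.lower().rstrip(".").endswith(suffixes)


def verify_googlebot_ip(ip_address):
    """Reverse DNS lookup, suffix check, then forward lookup back to the IP."""
    try:
        hostname, _aliases, _addresses = socket.gethostbyaddr(ip_address)
        if not hostname_matches(hostname):
            return False
        _name, _aliases, forward_ips = socket.gethostbyname_ex(hostname)
        return ip_address in forward_ips
    except OSError:
        # Lookup failures are treated as "not verified".
        return False


ua = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
print(claims_to_be_crawler(ua))
print(hostname_matches("crawl-66-249-66-1.googlebot.com"))
```

A request would be treated as a genuine Googlebot visit only if both the user-agent check and `verify_googlebot_ip` succeed for the requesting IP address.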

How do web crawlers work?

Web crawlers work by sending out requests to websites and then following the links on those websites to other websites. They keep track of the pages they visit and the links they find so that they can index the web and make it searchable.

Why are web crawlers called spiders?

Web crawlers are called spiders because they crawl through the web, following links from one page to another.
