Why Is Statistical Significance Important?

Discover why statistical significance matters in data analysis, research, and business decisions. Learn about p-values, hypothesis testing, and how to interpret...

Master A/B testing for betting affiliates: learn p-values, confidence levels, sample sizes, and strategies to optimize conversions.

Imagine you’ve been promoting a sports betting platform for six months, and you decide to test a new landing page headline that promises “Guaranteed Winning Picks” versus your current “Start Winning Today” headline. After just 50 clicks, the new headline gets 3 conversions while the old one gets 2—a 50% improvement that seems incredible. But here’s the problem: statistical significance is the difference between a real, repeatable improvement and pure luck. Statistical significance tells you whether your test results are genuine or just random noise in your data. For betting affiliates, this distinction directly impacts your commission checks—if you optimize based on luck instead of real patterns, you’ll waste time and traffic on changes that don’t actually improve your earnings. Understanding when results are statistically significant versus when they’re just random variation is the foundation of profitable A/B testing that compounds your affiliate income over time.
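To see why the headline example above is too small to trust, here is a minimal sketch of a pooled two-proportion z-test using only the Python standard library. It assumes each headline received 50 clicks (the text does not say how the clicks were split), and the function name `two_proportion_p_value` is a hypothetical helper, not part of any testing platform. The normal approximation is rough with counts this small, so treat the output as indicative only.

```python
import math

def two_proportion_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value for a pooled two-proportion z-test."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled conversion rate under the assumption of no real difference.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 1.0  # no conversions (or all conversions): nothing to compare
    z = (rate_b - rate_a) / std_err
    # Two-sided tail probability of the standard normal distribution.
    return math.erfc(abs(z) / math.sqrt(2))

# Assumed split: 50 clicks per headline, 2 vs 3 conversions.
p_value = two_proportion_p_value(conv_a=2, n_a=50, conv_b=3, n_b=50)
print(f"p-value: {p_value:.2f}")
print("significant at 95%" if p_value <= 0.05 else "not significant at 95%")
```

Under these assumptions the p-value lands far above 0.05, so the apparent 50% lift cannot be distinguished from luck.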

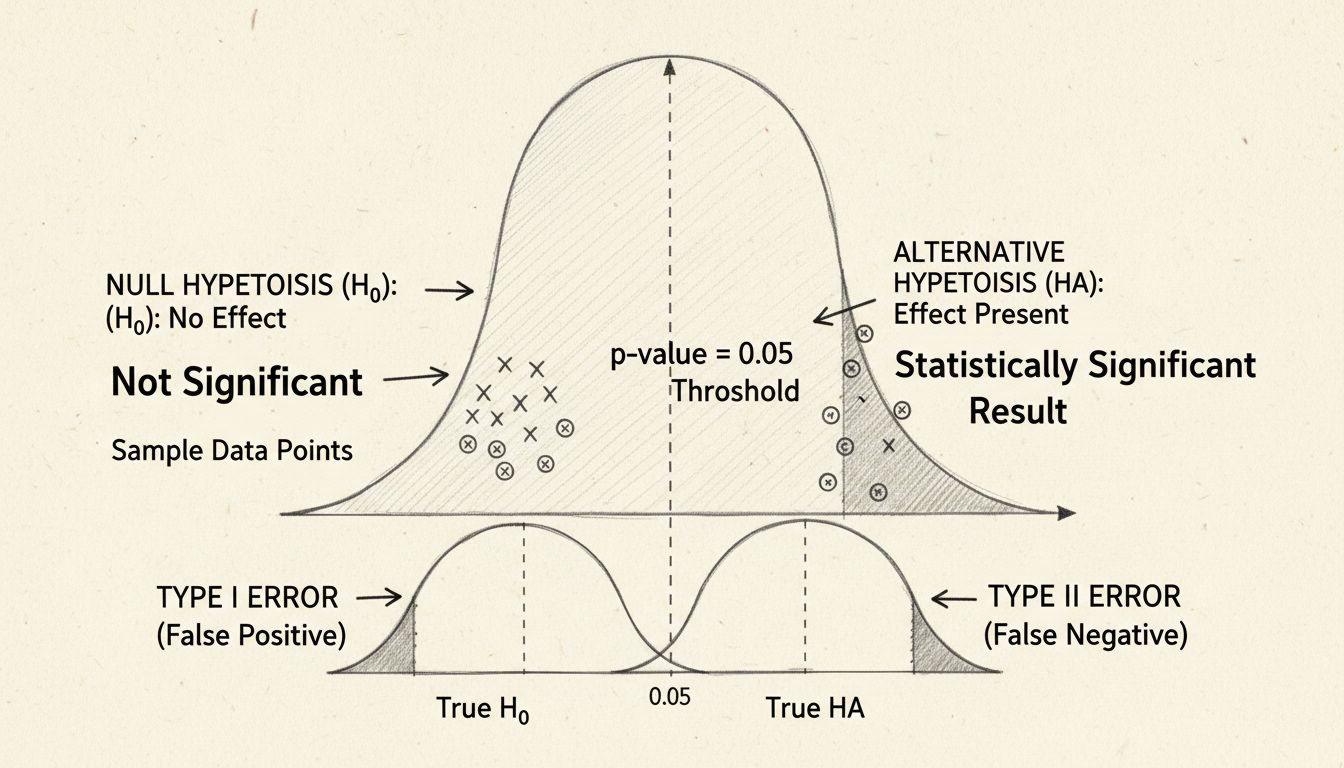

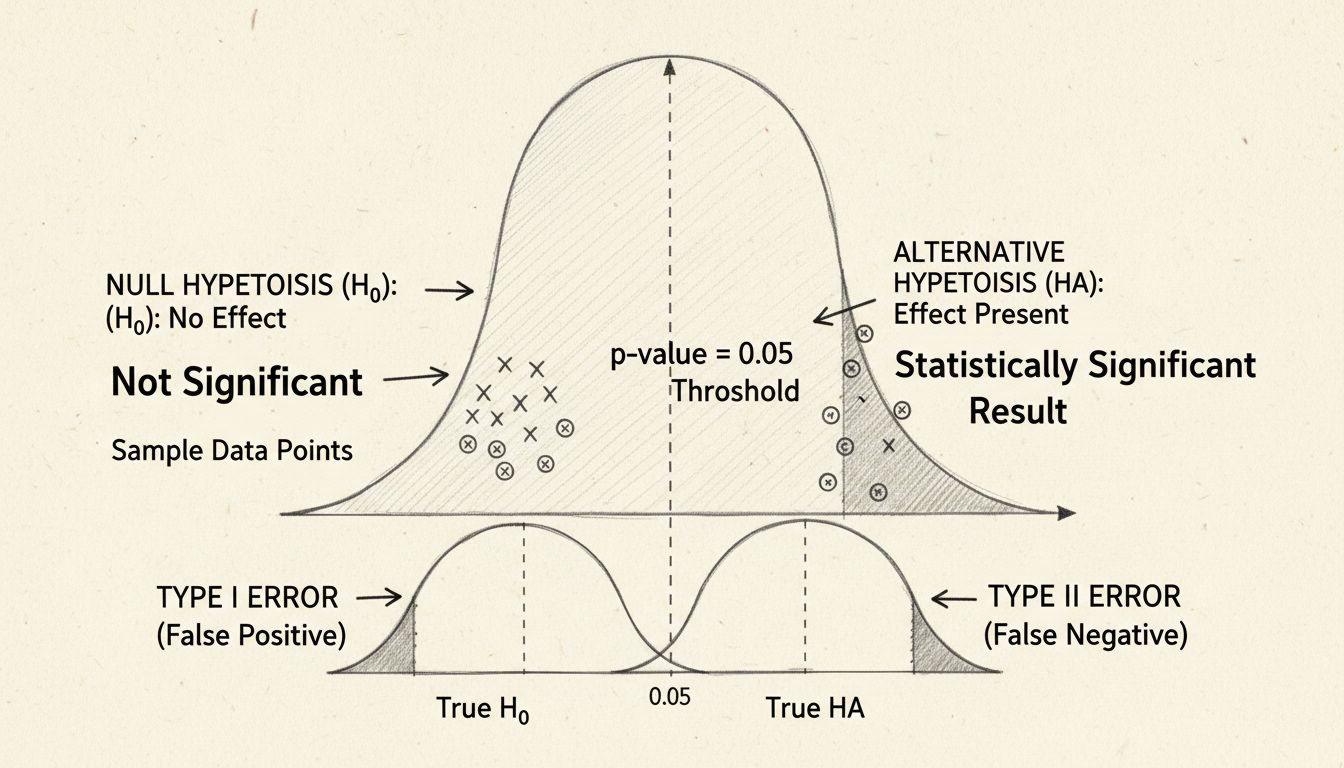

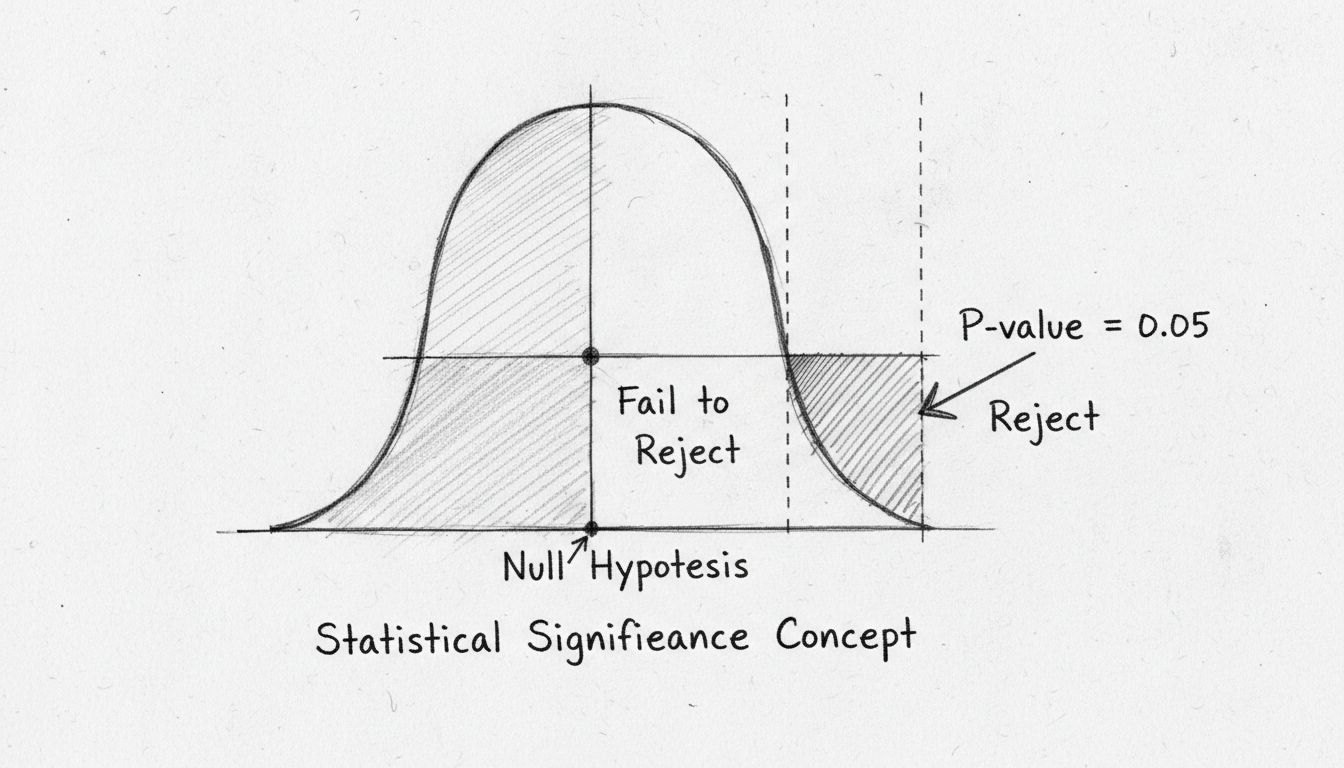

A p-value is essentially a probability score that answers this question: “If there were no real difference between my two variations, what’s the chance I’d see results at least this extreme just by random luck?” In betting affiliate marketing, if you’re testing two different CTA button colors and get a p-value of 0.05, that means that if the two colors truly performed the same, a difference this large would show up only about 5% of the time, which is why 0.05 is the threshold most marketers use. Confidence level is the flip side of this coin: testing at a 95% confidence level means you call a result significant only when its p-value is 0.05 or lower, so a test with no real difference will produce a false winner only about 5% of the time. It does not mean there is a 95% probability that your result is real. For example, if you test a new promotional offer (“Bet $10, Get $50 Free”) against your control and achieve a p-value of 0.03, the result clears the 95% threshold, and you have reasonable evidence that this offer converts better than your previous “$25 Free Bet” promotion. The industry standard is 95% confidence (p-value of 0.05 or lower), though high-stakes betting affiliate campaigns sometimes require 99% confidence for major decisions. Think of it this way: if you ran 100 tests in which the two variations were actually identical, you’d expect about 5 of them to look significant at the 95% level purely by chance.

| Confidence Level | P-Value Threshold | False Positive Risk | What It Means |
|---|---|---|---|
| 90% | 0.10 | 10% (1 in 10) | If there is no real difference, a false winner appears about 1 time in 10 |
| 95% | 0.05 | 5% (1 in 20) | If there is no real difference, a false winner appears about 1 time in 20 (Industry Standard) |
| 99% | 0.01 | 1% (1 in 100) | If there is no real difference, a false winner appears about 1 time in 100 |
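The false positive risk in the table can be checked with a small simulation. The sketch below runs repeated A/A tests, where both variations share the same true conversion rate, and counts how often a two-proportion z-test still reports significance at the 0.05 threshold. The conversion rate, visitor count, and number of runs are all assumed values chosen for illustration.

```python
import math
import random

def p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value for a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / std_err
    return math.erfc(abs(z) / math.sqrt(2))

random.seed(42)          # fixed seed so the run is repeatable
TRUE_RATE = 0.05         # assumed conversion rate, identical for both arms
VISITORS = 2000          # assumed visitors per variation
RUNS = 500               # number of simulated A/A tests

false_positives = 0
for _ in range(RUNS):
    conv_a = sum(random.random() < TRUE_RATE for _ in range(VISITORS))
    conv_b = sum(random.random() < TRUE_RATE for _ in range(VISITORS))
    if p_value(conv_a, VISITORS, conv_b, VISITORS) <= 0.05:
        false_positives += 1

false_positive_rate = false_positives / RUNS
print(f"Share of A/A tests flagged as significant: {false_positive_rate:.1%}")
```

The share should hover near 5%, which is what a 0.05 threshold promises: a cap on false alarms when nothing has changed, not a guarantee that any given winner is real.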

One of the most common mistakes betting affiliates make is declaring a winner too early with insufficient data. Sample size refers to the number of visitors, clicks, or conversions you need before your results become statistically reliable—and for betting affiliate campaigns, you typically need a minimum of 300 conversions per variation before you can trust your results. Statistical power is your test’s ability to detect a real difference when one actually exists; the industry standard is 80% power, meaning your test has an 80% chance of catching a genuine improvement if it’s there. Without adequate statistical power, you risk false negatives—situations where a variation actually performs better, but your test fails to detect it because you didn’t run it long enough. For instance, if you’re testing a new email subject line for your betting affiliate list ("⚡ Live Odds Alert: +250 Underdog Pick" versus “Weekly Sports Betting Tips”), you might see a 2% difference in click-through rates after just 100 clicks, but that difference could disappear once you reach 5,000 clicks. While statistical calculators exist to help you determine exact sample sizes based on your baseline conversion rate and desired improvement, the practical takeaway is simple: patience is profitable—rushing to implement changes based on small sample sizes will cost you money in the long run.
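As a rough illustration of what a sample size calculator does, the sketch below applies the standard normal-approximation formula for comparing two proportions. The function name `visitors_per_variation`, the 3% baseline rate, and the 20% relative lift are assumptions for the example; real calculators may use slightly different formulas and give somewhat different numbers.

```python
import math
from statistics import NormalDist

def visitors_per_variation(baseline_rate, relative_lift, alpha=0.05, power=0.80):
    """Approximate visitors needed per variation for a two-sided test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    # Critical values of the standard normal for the chosen alpha and power.
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)

# Assumed scenario: 3% baseline conversion rate, hoping to detect a 20% relative lift.
needed = visitors_per_variation(baseline_rate=0.03, relative_lift=0.20)
print(f"Visitors needed per variation: {needed:,}")
```

Smaller expected lifts push the requirement up quickly, because the difference between the two rates appears squared in the denominator.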

Running a proper A/B test for your betting affiliate campaigns follows a structured methodology that ensures your results are trustworthy and actionable:

Define Your Goal: Decide what metric matters most—click-through rate (CTR), conversion rate, revenue per visitor (RPV), or customer lifetime value. For betting affiliates, RPV is often more important than raw conversion rate since higher-value players generate more commission.

Isolate One Variable: Test only one element at a time (headline, button color, offer amount, or ad copy). Testing multiple changes simultaneously makes it impossible to know which change actually drove results.

Split Traffic Equally: Send 50% of your traffic to the control (original) and 50% to the variation (new version), and assign visitors at random. For a fixed amount of traffic, an unequal split reduces statistical power, and a non-random split (for example, by time of day or traffic source) introduces bias.

Run Until You Reach Your Target Sample Size: Continue the test until you reach the sample size you calculated before launch, then check for statistical significance (p-value ≤ 0.05, or 95% confidence). This might take days or weeks depending on your traffic volume.

Analyze Results Thoroughly: Look beyond just the primary metric—check for unexpected effects on other metrics, segment results by traffic source, and verify the improvement makes practical sense.

Implement the Winner: Once statistically significant, implement the winning variation across all traffic and document the improvement for future reference.

Plan Your Next Test: Use the insights from this test to inform your next hypothesis, creating a continuous cycle of optimization.
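The equal traffic split in the steps above is normally handled by your testing platform. For readers curious how it can work, here is a minimal sketch of one common approach: hashing a visitor ID so that each visitor always sees the same version and traffic divides roughly 50/50. The function `assign_variation`, the visitor ID format, and the test name are hypothetical.

```python
import hashlib

def assign_variation(visitor_id: str, test_name: str) -> str:
    """Deterministically assign a visitor to 'control' or 'variation'."""
    # Including the test name keeps assignments independent across tests.
    key = f"{test_name}:{visitor_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % 100
    return "control" if bucket < 50 else "variation"

# Check the split on 10,000 made-up visitor IDs.
counts = {"control": 0, "variation": 0}
for i in range(10_000):
    counts[assign_variation(f"visitor-{i}", "headline-test")] += 1
print(counts)
```

Because the assignment depends only on the ID and the test name, a returning visitor lands in the same group on every visit, which keeps the two groups cleanly separated.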

The most dangerous mistake betting affiliates make is “peeking” at results before reaching the planned sample size: checking your test results daily and stopping early when you see a winner. This practice inflates your false positive rate dramatically; if you check for significance 10 times during a test and stop at the first significant reading, your chance of declaring a false winner rises from the nominal 5% to roughly 20%, meaning many of your “wins” are noise rather than real patterns. Another critical error is testing during atypical traffic periods, such as running a betting affiliate test during a major sporting event (World Cup, Super Bowl, March Madness) when user behavior is completely different from normal conditions, so your results won’t apply to regular traffic. Changing your test mid-stream (adjusting offers, modifying copy, or shifting traffic allocation) invalidates all previous data and forces you to start over. Using a sample size that’s too small is equally problematic; many betting affiliates declare winners after just 50-100 conversions, which is too little data to be reliable and leads to implementing changes that are actually just lucky flukes. The discipline required for proper A/B testing is significant: you must commit to running tests for their full duration, resist the urge to tweak things, and accept that some tests will show no winner. This patience is what separates profitable betting affiliates from those who chase random variations and waste traffic on false improvements.

| Common Mistake | Why It’s a Problem | How to Avoid It |
|---|---|---|
| “Peeking” and stopping early | Increases false positives due to normal statistical fluctuations | Determine sample size before launch; don’t stop until target is reached |
| Testing during atypical traffic | Results won’t apply to normal business conditions | Schedule tests during regular weeks; avoid major sports events |
| Changing test mid-stream | Invalidates all data; impossible to know what caused results | If changes needed, stop test and launch new one with updated variation |
| Using small sample size | Results are statistically meaningless | Use sample size calculator; aim for minimum 300 conversions per variation |
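The cost of peeking can be demonstrated with a simulation. The sketch below runs A/A tests (no real difference between arms), checks significance after each of 10 equal batches of traffic, and compares how often a test is flagged at any look against how often it is flagged at the final look alone. The conversion rate, batch size, and run count are assumed values for illustration.

```python
import math
import random

def is_significant(conv_a, n_a, conv_b, n_b, z_crit=1.96):
    """Pooled two-proportion z-test at the 95% level (two-sided)."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return False
    z = (conv_b / n_b - conv_a / n_a) / std_err
    return abs(z) > z_crit

random.seed(7)       # fixed seed so the run is repeatable
TRUE_RATE = 0.10     # assumed conversion rate, identical for both arms
BATCH = 300          # assumed visitors per arm between looks
LOOKS = 10           # number of times the results are checked
RUNS = 500           # number of simulated tests

flagged_when_peeking = 0
flagged_at_end_only = 0
for _ in range(RUNS):
    conv_a = conv_b = visitors = 0
    flagged_at_any_look = False
    flagged_now = False
    for _look in range(LOOKS):
        conv_a += sum(random.random() < TRUE_RATE for _ in range(BATCH))
        conv_b += sum(random.random() < TRUE_RATE for _ in range(BATCH))
        visitors += BATCH
        flagged_now = is_significant(conv_a, visitors, conv_b, visitors)
        flagged_at_any_look = flagged_at_any_look or flagged_now
    flagged_when_peeking += flagged_at_any_look   # would have stopped early
    flagged_at_end_only += flagged_now            # single check at full sample

peeking_rate = flagged_when_peeking / RUNS
single_look_rate = flagged_at_end_only / RUNS
print(f"False winners with 10 peeks: {peeking_rate:.1%}")
print(f"False winners with one final check: {single_look_rate:.1%}")
```

The single final check stays close to the nominal 5%, while stopping at the first significant peek produces false winners several times as often.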

Let’s walk through real-world A/B testing scenarios that betting affiliates commonly run. Landing page headlines are prime testing territory: comparing “Join 50,000+ Winning Bettors” (control, 3.2% conversion rate) against “Get Expert Picks Delivered Daily” (variation, 4.1% conversion rate) across 2,000 visitors per variation shows a 28% relative improvement in conversions, but with only 64 versus 82 conversions that difference is not yet statistically significant (p ≈ 0.13 on a two-proportion z-test), so the test needs to keep running. CTA button text and color frequently surprise affiliates: testing a red “Claim Your Bonus” button against a green “Start Betting Now” button can shift conversion rates by 15-20% because color psychology affects urgency perception differently for different audiences. Promotional offers are critical for betting affiliates: testing “Bet $10, Get $50 Free” (control, 2.8% conversion) versus “Bet $10, Get $100 Free” (variation, 3.9% conversion) suggests that higher bonuses drive more signups, but you must calculate whether the increased signup volume justifies the higher payout to your affiliate partner. Ad copy variations matter tremendously: comparing “Limited Time: Double Your First Deposit” against “New Players: 100% Match Bonus Up to $500” can reveal which messaging resonates with your audience segment. Email subject lines for your betting affiliate list are endlessly testable: “⚡ Live Odds Alert: +250 Underdog Pick” might achieve a 28% open rate versus “This Week’s Best Bets” at 18%, directly impacting how many people click through to your affiliate links. The key is tracking not just conversions but revenue per visitor (RPV): a variation might increase signups by 10% but attract lower-value players, resulting in lower overall commission earnings.
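The point about revenue per visitor can be made concrete with a little arithmetic. The sketch below uses invented numbers: a variation that lifts signups by 10% but attracts players worth less commission on average. The function `revenue_per_visitor` and all figures are hypothetical.

```python
def revenue_per_visitor(visitors, signups, avg_commission_per_signup):
    """Commission earned per visitor sent to a page."""
    return signups * avg_commission_per_signup / visitors

# Hypothetical results: the variation wins on signups but loses on player value.
control_rpv = revenue_per_visitor(visitors=10_000, signups=300, avg_commission_per_signup=40.0)
variation_rpv = revenue_per_visitor(visitors=10_000, signups=330, avg_commission_per_signup=34.0)

print(f"Control RPV:   ${control_rpv:.3f}")
print(f"Variation RPV: ${variation_rpv:.3f}")
print("Better on RPV:", "variation" if variation_rpv > control_rpv else "control")
```

With these made-up figures the control earns more per visitor despite converting fewer signups, which is why conversion rate alone can point to the wrong winner.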

When your A/B test reaches completion, follow this decision framework: First, check for statistical significance—if your p-value is above 0.05 (confidence below 95%), your results are inconclusive and you should not implement either variation as a permanent change. Second, consider practical significance—a statistically significant 0.5% improvement in conversion rate might be real, but if it only increases your monthly commissions by $15, it might not be worth the effort to implement. If your test is inconclusive (no statistical significance), you have three options: run the test longer to gather more data, increase traffic allocation to the test, or abandon the hypothesis and test something else. If your test shows a negative result (the variation performs worse), congratulate yourself for avoiding a costly mistake and move on to your next hypothesis—this is valuable information. If your test shows a positive, statistically significant result, implement the winner immediately and document the improvement percentage, sample size, and confidence level for your records. For betting affiliates specifically, always cross-reference statistical significance with your actual commission impact—a 5% conversion rate improvement on a low-commission offer might be less valuable than a 2% improvement on a high-commission offer. Create a simple framework: statistical significance + practical significance + commission impact = implementation decision.
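The decision framework above can be sketched as a small function. This is only one way to encode it: the name `decide`, the return labels, and the $50 minimum monthly gain are assumptions, and the right commission threshold depends on your own campaigns.

```python
def decide(p_value, relative_lift, monthly_commission_gain,
           alpha=0.05, min_monthly_gain=50.0):
    """Combine statistical significance, direction, and commission impact."""
    if p_value > alpha:
        # Not enough evidence either way.
        return "inconclusive"
    if relative_lift <= 0:
        # Significant, but the variation performed worse than the control.
        return "keep control"
    if monthly_commission_gain < min_monthly_gain:
        # A real improvement that is too small to be worth the effort.
        return "significant but not worth implementing"
    return "implement variation"

print(decide(p_value=0.20, relative_lift=0.15, monthly_commission_gain=300))
print(decide(p_value=0.01, relative_lift=-0.08, monthly_commission_gain=-120))
print(decide(p_value=0.03, relative_lift=0.005, monthly_commission_gain=15))
print(decide(p_value=0.02, relative_lift=0.12, monthly_commission_gain=450))
```

The order of the checks mirrors the text: significance first, then direction, then whether the commission impact justifies the change.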

Several platforms make A/B testing accessible without requiring advanced statistical knowledge. Unbounce specializes in landing page testing with built-in statistical significance calculators, making it ideal for betting affiliates who want to test multiple landing page variations quickly. Visual Website Optimizer (VWO) and Optimizely offer more advanced testing capabilities including multivariate testing (testing multiple elements simultaneously) and audience segmentation, useful when you want to test different offers for different traffic sources. Statsig provides statistical significance calculations and helps you avoid common testing mistakes through automated alerts when you’re peeking at results too early. Beyond testing platforms, sample size calculators (available through most testing platforms or as standalone tools) let you input your baseline conversion rate and desired improvement to determine exactly how long your test needs to run. Statistical significance calculators allow you to input your control and variation results to instantly see your p-value and confidence level. Most betting affiliates also integrate their testing with analytics platforms like Google Analytics or their affiliate network’s built-in reporting to track not just conversions but commission earnings. If you’re using PostAffiliatePro to manage your affiliate campaigns, you can integrate it with most major testing platforms to track which variations drive the highest-value players. When selecting tools, prioritize platforms that make statistical significance calculations automatic and transparent—this removes guesswork and keeps you focused on testing strategy rather than math.

Statistical significance is the difference between affiliate marketing based on data and affiliate marketing based on hope, and mastering this concept will compound your earnings over time. The most successful betting affiliates don’t rely on single brilliant ideas; instead, they build a continuous testing culture where every element of their funnel—from ad copy to landing pages to email sequences—is constantly being optimized based on real data. Each small improvement compounds: a 5% improvement in landing page conversion rate, combined with a 3% improvement in email click-through rate, combined with a 2% improvement in offer acceptance, creates a 10%+ overall revenue increase that directly multiplies your affiliate commissions. The path to betting affiliate success is paved with disciplined A/B testing, statistical rigor, and the patience to let data guide your decisions rather than gut feelings or competitor imitation. Start implementing statistical significance testing in your campaigns today using PostAffiliatePro to track your results, and watch your commission earnings accelerate as you optimize based on real patterns rather than random variation.
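The compounding claim is easy to verify, assuming the three improvements apply to successive funnel stages and therefore multiply rather than add:

```python
# Improvements at successive funnel stages, taken from the example above.
stage_lifts = {
    "landing page conversion": 0.05,
    "email click-through": 0.03,
    "offer acceptance": 0.02,
}

combined = 1.0
for lift in stage_lifts.values():
    combined *= 1 + lift   # each stage multiplies the effect of the previous one

total_lift = combined - 1
print(f"Combined revenue lift: {total_lift:.2%}")
```

Multiplying the stages gives slightly more than the 10% you would get by simply adding the percentages.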

The industry standard is a p-value of 0.05 or lower, which corresponds to 95% confidence. However, the right p-value depends on your stakes—high-stakes decisions (like major offer changes) might require 0.01 (99% confidence), while low-stakes tests (like button color) can work with 0.10 (90% confidence). Always balance statistical rigor with practical business needs.

The duration depends on your sample size requirements, not an arbitrary timeframe. Calculate your needed sample size based on baseline conversion rate and desired improvement, then run until you reach that target. As a minimum, run tests for at least one full business cycle (typically one week) to account for day-of-week variations in betting behavior.

An insignificant result is not a failure—it's valuable information. It means you don't have enough evidence to say the variation is better than the control. Stick with your original version, document what you learned, and use those insights to formulate a stronger hypothesis for your next test.

Test one variable at a time rather than several at once. Testing multiple changes simultaneously makes it impossible to know which change actually drove results. If you want to test multiple elements, use multivariate testing, but that's more complex and requires larger sample sizes.

The minimum is typically 300 conversions per variation, though this varies based on your baseline conversion rate and desired improvement. Use a sample size calculator to determine exact requirements for your specific campaign. Testing with fewer conversions risks false positives and false negatives.

Statistical significance means the observed difference is unlikely to be due to chance alone (p-value < 0.05). Practical significance means the difference is meaningful for your business. A 0.1% improvement might be statistically significant but not worth implementing if it only increases monthly commissions by $5.

Avoid testing during major sporting events when user behavior is atypical. Results from these periods won't apply to normal traffic conditions. Schedule tests during regular business weeks to ensure your findings are representative of typical betting affiliate audience behavior.

Use a sample size calculator (available through most A/B testing platforms) and input your baseline conversion rate, desired improvement percentage, and desired confidence level. The calculator will tell you exactly how many visitors or conversions you need. If you're unsure, aim for at least 300 conversions per variation as a safe minimum.

